Abstract

Why do we research visual relationships?

Visual relationships connect isolated instances into a structured graph. They provide a level of scene understanding that sits above individual instances and below the holistic scene. Visual relationships therefore act as a bridge between perception and cognition.

What have we achieved in visual relationships so far?

From phrase detection to scene graph generation, we now have clearer data standards and task definitions for representing relationships.

What is next for visual relationships?

Beyond representing visual relationships, we should use relationship information to build the bridge from perception to cognition. Rather than merely producing a correct scene graph, relationships should carry richer semantics and play an actual role in scene understanding.

Why are applications of visual relationships stuck?

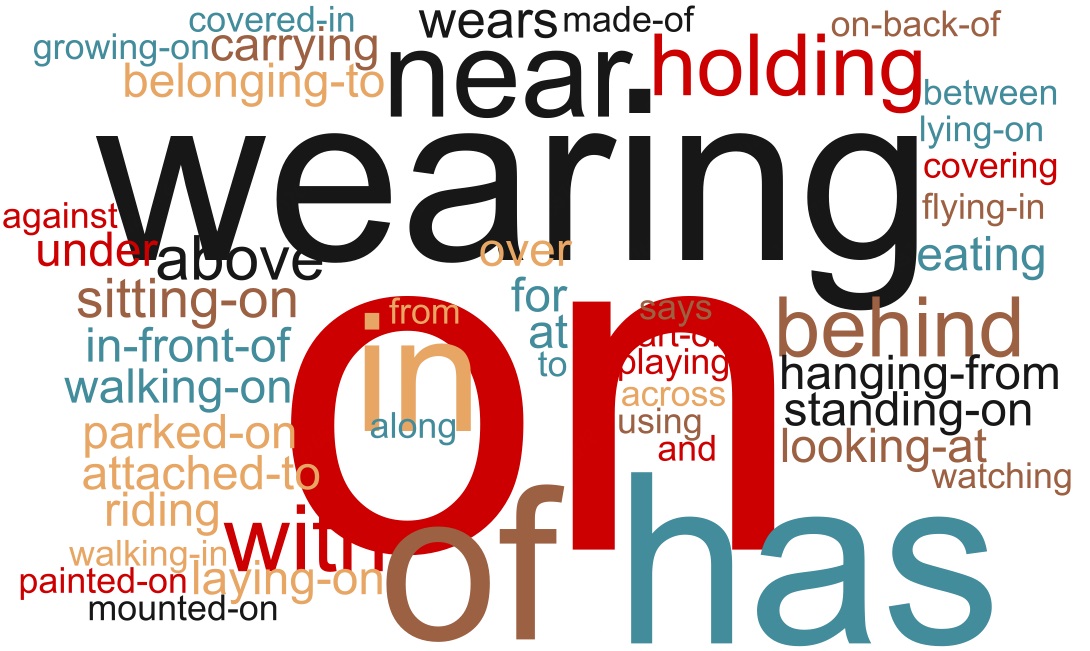

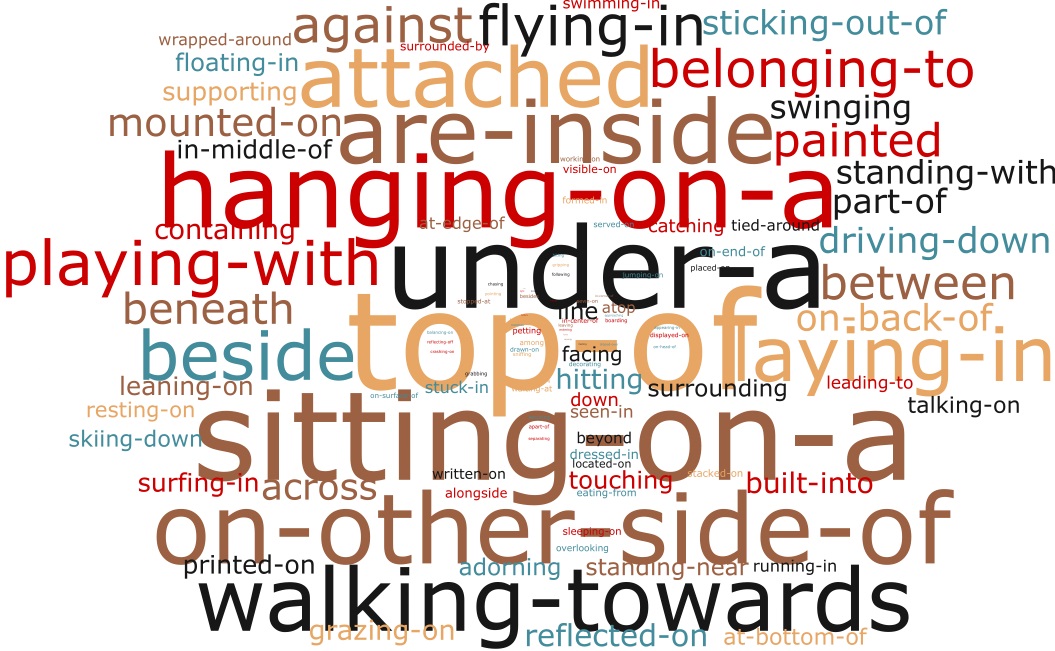

The main problems lie on the data side rather than the method side. Before designing methods for how to learn, we should first figure out what to learn.

What should be learned about visual relationships to support cognition?

Visually-relevant relationships! Visually-irrelevant relationships, such as spatial relationships and low-diversity relationships, reduce the relationship problem to object detection or deductive reasoning. These visually-irrelevant relationships pull relationship inference back toward the perception side. To take advantage of relationship information for semantic understanding, only visually-relevant relationships should be learned!
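To make the point about spatial relationships concrete, here is a minimal sketch that assigns coarse spatial predicates using only bounding-box coordinates. The `Box` type, the `spatial_predicate` function, and its rules are hypothetical illustrations, not taken from any dataset or published method. No pixel appearance is consulted, so once both objects are detected the predicate follows deterministically from geometry. This is the sense in which such relationships collapse into detection.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned box in image coordinates (y grows downward)."""
    x1: float
    y1: float
    x2: float
    y2: float


def spatial_predicate(subj: Box, obj: Box) -> str:
    """Infer a coarse spatial predicate from box geometry alone.

    Hypothetical rule set for illustration only; it never looks at image
    content, just the two boxes.
    """
    # Containment: the subject box lies entirely inside the object box.
    if (subj.x1 >= obj.x1 and subj.y1 >= obj.y1
            and subj.x2 <= obj.x2 and subj.y2 <= obj.y2):
        return "in"
    # Compare box centres; the dominant offset axis picks the predicate.
    scx, scy = (subj.x1 + subj.x2) / 2, (subj.y1 + subj.y2) / 2
    ocx, ocy = (obj.x1 + obj.x2) / 2, (obj.y1 + obj.y2) / 2
    dx, dy = scx - ocx, scy - ocy
    if abs(dx) >= abs(dy):
        return "left of" if dx < 0 else "right of"
    return "above" if dy < 0 else "below"


if __name__ == "__main__":
    lamp, table = Box(10, 0, 30, 20), Box(0, 40, 100, 80)
    print("lamp", spatial_predicate(lamp, table), "table")  # lamp above table
```

A relationship that a rule this simple can recover carries little visual information beyond the detections themselves. That is why such predicates add little to semantic understanding.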